How Dentists Can Track Dental Marketing ROI by Channel and Source

Posted on 1/11/2026 by WEO Media

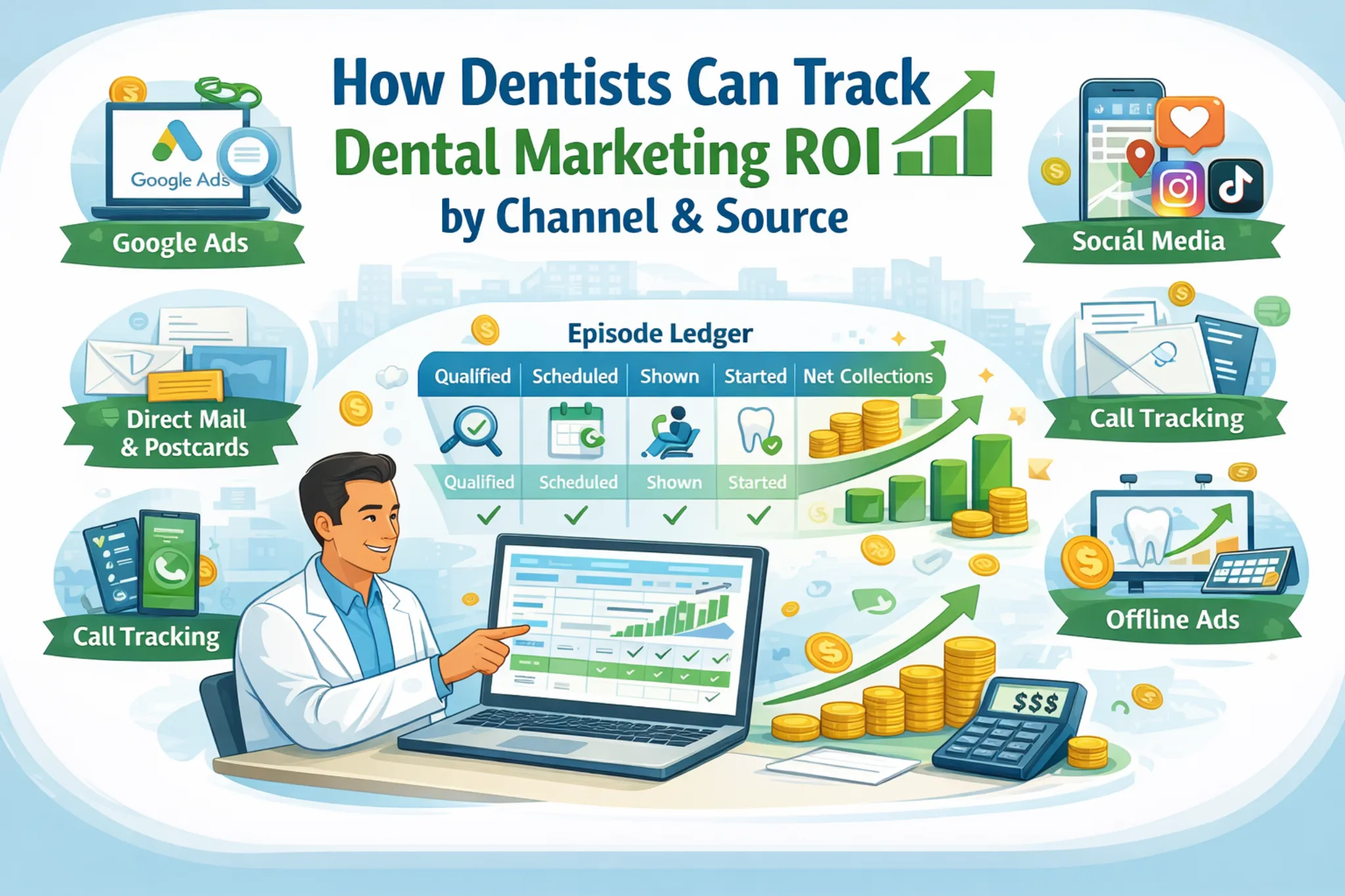

This guide from the dental analytics team at WEO Media - Dental Marketing explains how to connect marketing performance to real operational outcomes using a stable decision layer built around an episode ledger and an outcomes ladder (qualified → scheduled → shown → started → net collections). In this guide, “episode ledger” and “lead episodes” mean the same thing: a person-and-intent record that prevents double-counting.

Dental marketing ROI tracking is the process of labeling each inquiry with a consistent channel and source, then following that inquiry through qualified → scheduled → shown → started → net collections. The most defensible approach uses lead episodes to prevent double-counting, plus QA checks and confidence tiers so you don’t make budget decisions on broken measurement.

A simple micro-example: a patient clicks a Google Ad, calls, then fills out a form later that day. In the episode ledger, that’s one episode with multiple events—not three separate “leads.”

Who This Is For

- Owners/partners who want decision-ready ROI tied to net collections, not platform metrics.

- Office managers who need a repeatable month-end close and fewer reporting disputes.

- Marketing leads/agencies who want consistent taxonomy, QA evidence, and clean handoff diagnostics.

- Front desk leads who want dispositions to drive process improvements (availability, routing, follow-up), not blame.

Key takeaway: this is designed to help a real team align—not to “prove” one channel is the winner.

TL;DR

- Lock definitions monthly - Stop moving goalposts by publishing a Definitions Charter and versioning changes with effective dates.

- Use lead episodes - One person should not become three leads; episodes prevent double-counting.

- Report the ladder - Qualified → scheduled → shown → started → net collections is the operational truth most teams can align on.

- Add confidence tiers - Low-confidence data triggers fixes, not budget cuts.

- Overlay constraints - Availability, answer rate, and speed-to-lead explain outcomes without turning reporting into blame.

- Close the month consistently - Lock costs, roll cohorts forward, apply adjustments, and annotate changes.

Key takeaway: the goal is stable decisions, not perfect attribution.


What You Need to Start

Most ROI systems fail because they start with dashboards instead of inputs. Before you trust any chart, confirm you can capture inquiry events, stamp outcomes, and pull net collections inside a defined window.

- Systems - A way to capture calls, web forms, and at least one phone-first or offline pathway into a lead record.

- Attribution fields - Channel and source labels that persist beyond the first click or call.

- Outcome stamping - A consistent pick-list that marks scheduled, shown, and started.

- Collections access - A monthly process to pull net collections inside a fixed window (not just production).

- Ownership - One person accountable for definitions and one person accountable for stamping completeness, QA, and reconciliation.

A practical framing that reduces conflict: Analytics platforms are diagnostic; the episode ledger is the decision layer.

Minimum required fields for the episode ledger

Keep required fields minimal so completion stays high.

- Identifiers - episode_id, event_timestamp, event_type (call, form, text, chat, walk_in, map_call, offline).

- Attribution - channel, source, service_line, location.

- Contactability - At least one of phone or email, or a documented “unidentified” flag for blocked/anonymous contacts.

- Outcomes - disposition (from the pick-list), scheduled_date when scheduled, shown_flag when arrived, started_flag when your started rule is met.

- Reconciliation keys - patient_id when known, appointment_id when scheduled in the PMS (practice management system).

Key takeaway: if your team can’t fill these consistently, fix the workflow before you “fix the dashboard.”
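One way to make the minimum fields above enforceable is a small record type plus a completeness check. The sketch below is illustrative Python, not a feature of any particular CRM or PMS. The names `LedgerEvent` and `missing_fields`, and the `phone`, `email`, and `unidentified` fields, are assumptions chosen for this example.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional

# Allowed event types, taken from the Identifiers bullet above.
EVENT_TYPES = {"call", "form", "text", "chat", "walk_in", "map_call", "offline"}

@dataclass
class LedgerEvent:
    # Identifiers
    episode_id: str
    event_timestamp: datetime
    event_type: str
    # Attribution
    channel: str
    source: str
    service_line: str
    location: str
    # Contactability (hypothetical field names)
    phone: Optional[str] = None
    email: Optional[str] = None
    unidentified: bool = False
    # Outcomes
    disposition: Optional[str] = None
    scheduled_date: Optional[datetime] = None
    shown_flag: bool = False
    started_flag: bool = False
    # Reconciliation keys
    patient_id: Optional[str] = None
    appointment_id: Optional[str] = None

def missing_fields(e: LedgerEvent) -> List[str]:
    """Return the names of required fields that are missing or invalid."""
    problems = []
    if e.event_type not in EVENT_TYPES:
        problems.append("event_type")
    for name in ("episode_id", "channel", "source", "service_line", "location"):
        if not getattr(e, name):
            problems.append(name)
    # At least one of phone/email, or an explicit "unidentified" flag.
    if not (e.phone or e.email or e.unidentified):
        problems.append("contactability")
    # A scheduled episode should carry the PMS appointment key.
    if e.scheduled_date is not None and not e.appointment_id:
        problems.append("appointment_id")
    return problems
```

The share of events for which `missing_fields` returns an empty list is one possible input to a stamping-completeness measure.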


Quick Start: The Canonical 7-Step Process

Use this if you want a direct path without reading everything first.

1. Publish a Definitions Charter v1.0 and lock it for the month.

2. Capture channel/source for every lead event (calls, forms, texts, chats, walk-ins, map calls, offline).

3. Create an episode ledger with minimal required fields and a clear dedup rule.

4. Standardize outcomes and dispositions so scheduled, shown, and started are stamped consistently.

5. Segment by service line and location to prevent case mix disputes and false conclusions.

6. Run QA scripts on a cadence and label confidence tiers.

7. Run a month-end close packet and publish a one-page partner report with constraints and action owners.

Key takeaway: a defensible minimum viable system is achievable quickly when definitions and stamping come first.


One-Page Partner Report and Meeting Rules

If ROI meetings drift into “marketing vs front desk,” the issue is usually meeting structure plus missing confidence and constraints language.

One-page layout that reduces conflict

- Header - Month, window definitions, confidence tier, and any annotated changes with effective dates.

- By channel/source - Spend, qualified leads, scheduled, shown, started, net collections, ROI, CPL, CAC, payback status by policy.

- Constraints first - Availability (days-to-next-available), answer rate, speed-to-lead, top disposition reasons.

- Integrity notes - QA results, Direct/Unassigned shifts, spam spikes, routing failures, unresolved conflicts with an owner and due date.

- Actions - A short list of fixes/tests with owners and a review cadence.

A simple meeting rule helps: Start with confidence tier and constraints, then review ladder outcomes, then approve actions, and only then consider spend changes.

Constraints metrics: quick definitions you can standardize

These three definitions remove a lot of “we’re arguing about different math” friction.

- Answer rate - Answered calls ÷ total inbound calls (count missed + abandoned as not answered). Track by hour and by location to surface staffing constraints.

- Speed-to-lead - Median minutes from first inquiry event to first two-way staff response (call answered, call-back connected, or text/email reply). Track the median, not only the average, to avoid outliers masking reality.

- Days-to-next-available - Days between inquiry date and the next available appointment slot for that service line/provider (report separately for emergency vs consult vs hygiene).

Key takeaway: constraints metrics let you say “marketing is working, operations is constrained” without blaming anyone.
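The three definitions above translate directly into small functions. This is a minimal sketch with hypothetical function names and made-up sample data; it assumes you can export call counts and inquiry/response timestamps from your call tracking or CRM tool.

```python
from datetime import date, datetime, timedelta
from statistics import median
from typing import Iterable, Optional, Tuple

def answer_rate(answered: int, missed: int, abandoned: int) -> Optional[float]:
    """Answered calls / total inbound calls; missed and abandoned count as not answered."""
    total = answered + missed + abandoned
    return answered / total if total else None

def speed_to_lead_minutes(pairs: Iterable[Tuple[datetime, datetime]]) -> float:
    """Median minutes from first inquiry event to first two-way staff response."""
    return median((resp - inq).total_seconds() / 60 for inq, resp in pairs)

def days_to_next_available(inquiry: date, next_slot: date) -> int:
    """Days between the inquiry date and the next available slot."""
    return (next_slot - inquiry).days

# Illustrative data only: three fast responses and one slow outlier.
first_inquiry = datetime(2026, 1, 5, 9, 0)
pairs = [(first_inquiry, first_inquiry + timedelta(minutes=m)) for m in (4, 6, 5, 240)]
print(speed_to_lead_minutes(pairs))  # median of 4, 5, 6, 240 minutes
```

With this sample, the median is 5.5 minutes while the mean is 63.75 minutes, which is why the definition asks for the median alongside any average.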

Branded search inflation

Branded search often captures demand created earlier by other channels. To reduce conflict, separate branded and non-branded paid search in reporting, show first-touch and last-touch views for interpretation, and keep accounting strict by crediting net collections once per episode.

Key takeaway: branded performance is often a demand-capture and access check, not proof that upper-funnel channels are unnecessary.


Definitions Charter v1.0 (Copy/Paste Template)

A Definitions Charter stops the “what counts?” argument before it starts. Keep it short. Version it. Change it only with an effective date and a comparability note.

A practical conflict reducer: Marketing ROI “new patient” does not have to match billing “new patient.” Choose one rule, document it, map it to PMS fields, and apply it consistently.

Template note: The fields below are meant to be customized. If you’re not sure what to choose yet, use the “Example defaults” immediately below as a starting policy and calibrate after 60–90 days of stable measurement.

Copy/paste charter text (edit to match your practice)

- Lead - Any inquiry event that creates or updates an episode (call, form, text, chat, walk-in, map call, offline).

- Qualified lead - Meets intent + contactability rules for that service line.

- Episode window - ___ days; repeat contacts inside the window attach to the same episode unless the intent-change rule triggers a new episode.

- Intent-change rule - New episode is created when service_line changes from: ___ (list your “new intent” service lines), or when the episode window expires.

- New patient - Choose one policy: 24 months or 36 months since last visit (marketing definition).

- Scheduled - Appointment created in PMS or scheduling system with date/time recorded.

- Shown - Patient arrived for the appointment (define late policy).

- Started - Service-line milestone (document one per service line).

- Net collections window - Cash received within ___ days of start date (net of refunds/chargebacks), using payment date.

- Cost rules - Total marketing cost includes: ___ (list included costs) and is allocated by: ___ (rule).

- Confidence tier - High/medium/low based on stamping completeness and QA pass rate thresholds.

- Change control - Changes require an effective date label and a forward-only or backcast rule.

Key takeaway: the charter is short by design—long charters don’t get used.

Example defaults (so the template doesn’t feel unfinished)

Here is one common “starter policy” many practices can calibrate after 60–90 days of stable measurement:

- Episode window: 60 days.

- New patient: 24 months since last visit (marketing definition).

- Net collections window: 90 days from start date, payment date basis, net of refunds/chargebacks.

- Started: emergency = completed visit; general NP = first paid visit; implants/cosmetic = accepted treatment plan plus initial production (or your chosen milestone).

- Cost rule: include media + required management + tracking tools; allocate shared tools evenly unless volumes justify starts-based allocation.

Key takeaway: publishing a filled example prevents “template skepticism” while keeping the charter copyable.
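To show how the episode window and intent-change rule interact, here is a rough sketch of episode assignment using the 60-day example default. Several details are assumptions for illustration: the `person_key` field (however you identify the same person), the `NEW_INTENT_LINES` set, and the choice to measure the window from the first event of the episode. Your charter may define these differently.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Tuple

EPISODE_WINDOW = timedelta(days=60)          # example default from the starter policy
NEW_INTENT_LINES = {"implants", "cosmetic"}  # hypothetical "new intent" service lines

def assign_episodes(events: List[dict]) -> List[dict]:
    """Attach an episode_no to each event.

    Each event is a dict with person_key, timestamp (datetime), service_line.
    Events are processed in time order; the result is returned in that order.
    """
    # person_key -> (episode_no, episode_start, service_line)
    open_episodes: Dict[str, Tuple[int, datetime, str]] = {}
    next_no = 1
    out = []
    for ev in sorted(events, key=lambda e: e["timestamp"]):
        cur = open_episodes.get(ev["person_key"])
        expired = cur is not None and ev["timestamp"] - cur[1] > EPISODE_WINDOW
        intent_change = (
            cur is not None
            and ev["service_line"] != cur[2]
            and ev["service_line"] in NEW_INTENT_LINES
        )
        if cur is None or expired or intent_change:
            # Open a new episode for this person.
            cur = (next_no, ev["timestamp"], ev["service_line"])
            open_episodes[ev["person_key"]] = cur
            next_no += 1
        out.append({**ev, "episode_no": cur[0]})
    return out
```

Under these assumptions, an ad click, a call, and a form on the same day from the same person all land in one episode, while a contact after the window expires, or a switch to a listed service line, opens a new one.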


What Counts as a Lead (and What Doesn’t)

Most teams don’t argue about ROI because they hate math. They argue because they’re counting different things.

A lead should be any inquiry event that creates or updates an episode with acquisition intent. Everything else is either noise, service, or recruiting.

Include

- New-patient calls, forms, texts, and chats with care-seeking intent

- Emergency calls (even if the visit happens same-day)

- Map calls and call-only ad calls that never hit the website

- Directory/marketplace inquiries that result in a two-way exchange

- Offline responses that reach your office (postcard, billboard, radio), when you can label the placement

Exclude (or route to a non-acquisition bucket)

- Existing patient hygiene recall scheduling (unless your policy treats reactivation as its own channel/service line)

- Billing/insurance benefit verification calls that are not acquisition intent

- Job seekers, vendor solicitations, and non-patient spam

- Wrong numbers and robocalls (track separately as spam/noise so CPL doesn’t lie)

Key takeaway: clear include/exclude rules reduce staff frustration and prevent “lead inflation.”


New Patients vs Starts (and Why They Differ)

“New patient” is a status; “started” is an outcome milestone. They are related, but they are not interchangeable.

- New patient - A definition based on history (for example, no visit in 24 months). It often depends on how your PMS flags patient status and how you handle reactivation.

- Started - A service-line milestone you choose for ROI consistency (for example: emergency visit completed, general NP first paid visit, implants accepted plan + initial production).

- Why “started” is a better ROI anchor - It ties directly to operational conversion and to collections windows. It also avoids confusion when a “new patient” schedules but never shows.

Key takeaway: map new patient definitions to PMS fields, but anchor ROI to “started” milestones so the ladder stays operational.


Channel and Source Taxonomy: Canonical List, Mapping Rules, and UTM Logic

Channel and source are only useful if they’re stable and enforced. Most disputes come from naming drift, mixed capitalization, and “new labels” invented mid-month.

This is where many Direct/Unassigned disagreements start: inconsistent taxonomy turns fixable measurement problems into interpretation fights.

Canonical taxonomy list v1.0 (example channels and sources)

Keep the channel list small. Put detail in the source list.

- paid_search - google_ads, bing_ads, google_ads_call (call-only/search call assets), google_lsa (Local Services Ads)

- paid_social - meta, tiktok (in some practices: linkedin)

- gbp - google_business_profile

- organic_search - google_organic, bing_organic

- email_sms - practice_email, practice_sms (in some practices: recall_vendor)

- directories - healthgrades, yelp, zocdoc_marketplace, insurance_directory

- referrals - patient_referral, dentist_referral (in some practices: employer_union)

- offline - postcard, billboard, radio, community_event

- direct_unassigned - direct, unassigned (used for diagnosis, not as a “strategy channel”)

Key takeaway: canonical lists reduce drift and make QA objective.

Channel vs source vs medium vs campaign (quick definitions)

- Channel - The category used for decision-making (paid_search, paid_social, gbp, organic_search, referrals, offline).

- Source - The origin label inside that channel (google_ads, meta, google_business_profile, healthgrades).

- Medium - The transport type used in UTMs (cpc, paid_social, email, sms, qr, gbp).

- Campaign - The named initiative (brand_search, implants_q4, new_patient_offer).

Key takeaway: you can change campaign names frequently; you should not change channel/source definitions frequently.

Practical taxonomy rules

- Keep values few and stable - Use a small, controlled channel list and enforce lowercase to prevent drift.

- Separate channel from source - Channel is the category; source is the origin label.

- Always tag service line and location - Prevent case-mix and capacity shifts from masquerading as channel performance.

- Store first-touch and last-touch - Keep timestamps for interpretation, but credit net collections once per episode.

- Pick a medium convention and lock it - For example, you can use utm_medium=gbp for GBP website clicks in your episode ledger mapping, even if GA4’s default channel grouping does not recognize “gbp” without a custom channel group.

Key takeaway: taxonomy is governance, not a preference.

Default mapping rules (UTM → channel/source)

These rules keep the episode ledger consistent even when tools classify differently.

- Paid search - If utm_medium=cpc and utm_source is google_ads or bing_ads, map to channel paid_search and source utm_source.

- Paid social - If utm_medium=paid_social, map to channel paid_social and source based on utm_source.

- GBP - If utm_source=google_business_profile (or your chosen label), map to channel gbp in the episode ledger even if GA4 groups it differently by default.

- Email/SMS - If utm_medium=email or utm_medium=sms, map to channel email_sms.

- Offline QR/vanity - If utm_medium=qr or utm_medium=vanity, map to channel offline and source to the placement label (postcard, billboard, radio).

Key takeaway: mapping rules reduce “interpretation drift” across staff and vendors.
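The mapping rules above can be written as one small function so every tool and vendor applies them the same way. This is a sketch, not a prescribed implementation: the function name `map_utm` is hypothetical, and the fallback to `direct_unassigned` / `unassigned` for anything that matches no rule is an assumption based on the canonical taxonomy list.

```python
from typing import Tuple

def map_utm(utm_source: str, utm_medium: str) -> Tuple[str, str]:
    """Map raw UTM values to (channel, source) for the episode ledger."""
    # Normalize first so mixed capitalization cannot create new labels.
    src = (utm_source or "").strip().lower()
    med = (utm_medium or "").strip().lower()

    if med == "cpc" and src in {"google_ads", "bing_ads"}:
        return ("paid_search", src)
    if med == "paid_social":
        return ("paid_social", src)
    if src == "google_business_profile":
        return ("gbp", src)
    if med in {"email", "sms"}:
        return ("email_sms", src)
    if med in {"qr", "vanity"}:
        # Source carries the placement label (postcard, billboard, radio).
        return ("offline", src)
    # Assumption: anything unmatched is surfaced for diagnosis, not guessed.
    return ("direct_unassigned", "unassigned")

print(map_utm("google_ads", "cpc"))
print(map_utm("postcard", "qr"))
```

Keeping the rules in one place means a change to the mapping is a single versioned edit with an effective date, rather than a set of slightly different spreadsheet formulas.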

UTM examples people can copy

- Paid search - ?utm_source=google_ads&utm_medium=cpc&utm_campaign=implants_q4&utm_content=adgroup_a

- Paid social - ?utm_source=meta&utm_medium=paid_social&utm_campaign=new_patient_offer&utm_content=video_1

- GBP website link - ?utm_source=google_business_profile&utm_medium=gbp&utm_campaign=gbp_website

- Email - ?utm_source=practice_email&utm_medium=email&utm_campaign=recall_reactivation&utm_content=button

- SMS - ?utm_source=practice_sms&utm_medium=sms&utm_campaign=recall_reactivation&utm_content=link

- Offline QR - ?utm_source=postcard&utm_medium=qr&utm_campaign=summer_special&utm_content=qr_front

Key takeaway: copy/paste examples reduce staff improvisation and make QA faster.

Directory and marketplace sources (how to map them)

Patients discover practices via directories, review sites, and marketplace-style listings. Treat these as sources you can name consistently.

- Recommended approach - Keep channel as directories (or referrals, if that’s your policy), and set source to the specific directory (healthgrades, yelp, zocdoc_marketplace, insurance_directory).

- Why it matters - These sources behave differently than ads and SEO; separating them reduces “SEO vs ads” arguments and helps cost allocation.

- Workflow guardrail - Use a controlled intake pick-list so staff do not type “HG,” “health grades,” or “that review site.”

Key takeaway: directory traffic is real demand; it’s also easy to misclassify unless you standardize.

UTM QA checklist for every campaign launch

- Confirm UTMs survive redirects - Check the final landing URL after all redirects.

- Test scheduler/form handoffs - Verify UTMs are not stripped before the episode ledger captures them.

- Run a test form and test call - Confirm channel/source populate correctly end-to-end.

- Validate allowed values - Lowercase and no “new” labels without governance approval.

- Confirm spam controls - Strong enough to reduce noise without blocking legitimate patients.

Key takeaway: QA at launch prevents weeks of corrupted reporting.
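Part of the launch checklist above (surviving parameters, lowercase, allowed values) can be checked mechanically against the final landing URL. The sketch below uses Python's standard `urllib.parse`; the function name `utm_problems` and the decision to require `utm_source`, `utm_medium`, and `utm_campaign` are assumptions, and the allowed sets are abbreviated from the example taxonomy.

```python
from typing import List
from urllib.parse import parse_qs, urlsplit

# Abbreviated allowed values; extend these from your locked taxonomy.
ALLOWED = {
    "utm_source": {"google_ads", "bing_ads", "meta", "google_business_profile",
                   "practice_email", "practice_sms", "postcard", "billboard", "radio"},
    "utm_medium": {"cpc", "paid_social", "gbp", "email", "sms", "qr", "vanity"},
}
REQUIRED = ("utm_source", "utm_medium", "utm_campaign")

def utm_problems(final_url: str) -> List[str]:
    """List taxonomy problems found in the query string of a landing URL."""
    params = parse_qs(urlsplit(final_url).query)
    problems = []
    for key in REQUIRED:
        values = params.get(key)
        if not values:
            # Missing or blank: often a sign a redirect stripped the parameter.
            problems.append(f"{key}: missing")
            continue
        value = values[0]
        if value != value.lower():
            problems.append(f"{key}: not lowercase")
        allowed = ALLOWED.get(key)
        if allowed is not None and value.lower() not in allowed:
            problems.append(f"{key}: not in allowed list")
    return problems

print(utm_problems("https://example.com/?utm_source=Google_Ads&utm_medium=cpc"))
```

Run it on the URL you actually land on after all redirects, not the URL you pasted into the ad platform. It does not replace the test form and test call, which check the handoff into the ledger.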

Tracking referrals and word-of-mouth without free-text drift

Use a controlled intake question and a controlled pick-list. Keep referral values standardized and avoid free-text notes that create taxonomy drift. If an episode also has ad clicks or tracked web paths, credit net collections once per episode and use first-touch/last-touch for interpretation, not double-counting.

Key takeaway: referral tracking succeeds when it is easy to select and hard to improvise.


Local Services Ads (LSA) + Google Guaranteed (Taxonomy + Measurement Notes)

Local Services Ads (often associated with “Google Guaranteed”) are common in dentistry and deserve a stable place in your taxonomy so they don’t get lumped into “Google” and cause reporting fights.

- Taxonomy recommendation - Keep channel as paid_search and set source to google_lsa (or your chosen locked label).

- How leads arrive - Many LSA leads are phone-first and may never hit your website, so rely on call tracking + the episode ledger, not GA4 sessions.

- Workflow guardrail - Treat LSA calls like any other call: create/update an episode, apply dispositions, and stamp ladder outcomes consistently.

Key takeaway: if LSA is popular in your market, labeling it cleanly prevents “Google wins everything” disputes.


How to Separate Brand vs Nonbrand in Google Ads Reporting

Branded search can look “amazing” even when it’s mostly capturing demand created by other channels. Nonbrand can look “bad” even when it’s building pipeline that closes later. Separating brand and nonbrand prevents both misreads.

A simple naming convention that sticks

- Brand - Campaign names start with brand__ (example: brand__search__general).

- Nonbrand - Campaign names start with nonbrand__ (example: nonbrand__search__implants).

- Everything-else bucket - PMax/other automation stays in pmax__ (or other__), so partner views stay comparable month to month.

Key takeaway: naming conventions are governance; they prevent “we reorganized and lost comparability.”
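A prefix convention like the one above is easy to check automatically when you export campaign names. In this sketch the function name `campaign_bucket` is hypothetical, and returning `unlabeled` for names without a known prefix is an assumption, chosen so naming drift shows up as a governance fix instead of being silently grouped.

```python
KNOWN_PREFIXES = ("brand__", "nonbrand__", "pmax__", "other__")

def campaign_bucket(campaign_name: str) -> str:
    """Return the reporting bucket implied by a campaign name prefix."""
    name = campaign_name.strip().lower()
    for prefix in KNOWN_PREFIXES:
        if name.startswith(prefix):
            return prefix[:-2]  # drop the trailing double underscore
    return "unlabeled"

for name in ("brand__search__general", "nonbrand__search__implants", "Implants Q4"):
    print(name, "->", campaign_bucket(name))
```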

Query rules (simple and defensible)

- Brand queries - Your practice name, provider names, and branded variations/misspellings.

- Nonbrand queries - Service + city, symptom + dentist, “near me” queries, and competitor conquest if you run it.

- Gray zone - “Best dentist” queries can behave like brand for strong local brands; keep them in nonbrand unless you document a different rule.

Key takeaway: write the rules down once and stop renegotiating them every meeting.

What to do when brand looks amazing but nonbrand collapses

- Check access first - If days-to-next-available is high or answer rate is low, nonbrand may be generating demand you can’t capture.

- Watch brand CPC/CPA trends - Spikes can signal competitors bidding on your name or local visibility changes; brand “performance” may just be a defense cost.

- Use a partner-safe view - Show brand, nonbrand, and pmax separately in the one-page report, but credit net collections once per episode so you don’t double-count.

Key takeaway: splitting brand vs nonbrand reduces conflict and leads to better decisions than “Google is great” as a blanket conclusion.


Auto-Tagging vs UTMs: The Rule of Record (With a Concrete Example)

Auto-tagging typically means platforms attach click identifiers for their own measurement and optimization. UTMs are human-readable labels that help keep a stable taxonomy across tools.

Rule of record: the episode ledger uses your locked taxonomy for decision-making; platform reports and GA4 are directional for diagnosis and optimization.

Concrete example: why the numbers disagree

A common scenario looks like this:

- Google Ads shows conversions because it’s attributing within its own model and identifiers.

- GA4 shows higher Direct/Unassigned because a scheduler redirect stripped parameters, an embedded experience didn’t pass context, or consent choices reduced observable signals.

- The episode ledger shows fewer, more defensible starts because it merges multi-touch events into one episode and stamps outcomes consistently.

In that scenario, the practical move is to fix the handoff and QA the pipeline. Then reconcile to starts and net collections.

Key takeaway: disagreements shrink when you define one decision layer and treat everything else as diagnostic context.


ROI Formulas, Calculator, CPL, and “What’s a Good Dental Marketing ROI?”

The core formulas

- ROI - (net collections − total marketing cost) ÷ total marketing cost.

- ROAS (return on ad spend) - net collections ÷ ad spend.

- CAC (customer acquisition cost) - total marketing cost ÷ starts.

- CPL (cost per lead) - total marketing cost ÷ total leads (inquiries).

- CPQL (cost per qualified lead) - total marketing cost ÷ qualified leads.

- Payback status - whether cumulative net collections have met/exceeded cost yet (timing matters).

Plain language: ROI answers “did we earn more than we spent,” while ROAS answers “how much came back per ad dollar.” CPL and CPQL help diagnose lead quality before you blame front desk conversion.

Why collections beats production for ROI decisions

Production can be useful operationally, but it often misleads ROI discussions because it can include amounts that are never collected, timing that doesn’t match cash reality, and assumptions that differ by payer mix. Collections-based ROI is harder, but it’s more stable for decision-making because it reflects what actually came in. The key isn’t perfection—it’s consistency: define your net collections window, apply it the same way each month, and annotate exceptions.

A simple calculator structure you can replicate in any spreadsheet

Inputs (per channel/source): total marketing cost, ad spend (optional), leads, qualified leads, scheduled, shown, started, net collections (in your window), refunds/chargebacks (so “net” stays net).

Outputs: ROI, ROAS, CAC, CPL, CPQL, and payback status by policy.

Spreadsheet column template (copy/paste structure)

- month

- channel

- source

- location

- service_line

- ad_spend

- non_media_cost

- total_marketing_cost

- leads

- qualified

- scheduled

- shown

- started

- net_collections

- refunds_chargebacks

- roi

- roas

- cac

- cpl

- cpql

- payback_status

Key takeaway: using a literal column structure prevents “same numbers, different spreadsheets” disputes.

Filled example rows (so readers can visualize the template)

Below is the same structure filled for two channels (illustrative only):

- 2026-01 | paid_search | google_ads | location_a | general_np | ad_spend=4000 | non_media_cost=1000 | total=5000 | leads=40 | qualified=25 | scheduled=14 | shown=11 | started=8 | net=12000 | refunds=0 | roi=1.40 | roas=3.00 | cac=625 | cpl=125 | cpql=200 | payback=paid

- 2026-01 | gbp | google_business_profile | location_a | emergency | ad_spend=0 | non_media_cost=400 | total=400 | leads=18 | qualified=14 | scheduled=10 | shown=9 | started=9 | net=5400 | refunds=0 | roi=12.50 | roas=n/a | cac=44 | cpl=22 | cpql=29 | payback=paid

Key takeaway: a filled example makes the spreadsheet intent real and reduces “how do I set this up?” friction.
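The filled rows above can be reproduced with a few lines of code, which is a quick way to confirm a spreadsheet is applying the core formulas as written. This is a minimal sketch: the names `ratio` and `channel_metrics` are hypothetical, and returning `None` when a denominator is zero (for example, ROAS with no ad spend) is an assumption that corresponds to the "n/a" in the second row.

```python
from typing import Optional

def ratio(numerator: float, denominator: float) -> Optional[float]:
    """Divide, returning None instead of failing when the denominator is zero."""
    return numerator / denominator if denominator else None

def channel_metrics(total_cost, ad_spend, leads, qualified, starts, net_collections):
    """Apply the core formulas to one channel/source row."""
    return {
        "roi": ratio(net_collections - total_cost, total_cost),
        "roas": ratio(net_collections, ad_spend),
        "cac": ratio(total_cost, starts),
        "cpl": ratio(total_cost, leads),
        "cpql": ratio(total_cost, qualified),
        "payback": "paid" if net_collections >= total_cost else "not_yet",
    }

# Inputs copied from the two illustrative rows.
paid_search = channel_metrics(5000, 4000, 40, 25, 8, 12000)
gbp = channel_metrics(400, 0, 18, 14, 9, 5400)
print(paid_search)
print(gbp)
```

For the paid_search row this yields ROI 1.4, ROAS 3.0, CAC 625, CPL 125, and CPQL 200. For the gbp row it yields ROI 12.5, no ROAS, and CAC, CPL, and CPQL that round to 44, 22, and 29.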

What costs count in dental marketing ROI?

Consistency matters more than the “perfect” list. Most practices include media spend, management/retainers tied to acquisition, required creative, and tracking tools used for acquisition. Shared tools should be allocated with a documented rule (by starts, by qualified leads, or evenly) and kept consistent.

What’s a good dental marketing ROI?

There isn’t one number that’s “good” for every practice because ROI depends on your case mix, payer mix, margin, capacity, and how fast you can schedule and start patients. A safer way to answer the question is to use four checks together:

- Break-even CAC - Set a target based on net collections per start × contribution margin (then add a buffer).

- Payback window - Decide how quickly you need the channel to pay back based on cash flow and scheduling reality, then track cumulative collections vs cost over time.

- Confidence tier - A “great ROI month” with low confidence should trigger fixes before spend changes.

- Capacity constraints - If days-to-next-available is high or answer rate is low, ROI may reflect access limits, not channel quality.

Key takeaway: a “good ROI” is one that clears your break-even and payback policies with high confidence and acceptable access.

A concrete break-even CAC example (no industry benchmarks required)

If your average net collections per start (inside your window) is $1,800 and your conservative contribution margin is 60%, then:

- Break-even CAC ≈ 1,800 × 0.60 = $1,080

A practical internal target might be 15–25% below break-even to account for confidence tier, seasonality, refunds/chargebacks, and capacity constraints. For example, 20% below break-even would be ~$864.

Key takeaway: “good ROI” starts with your own break-even math, not someone else’s benchmark.
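The break-even arithmetic above is simple enough to keep as a pair of helper functions so the target updates whenever your inputs change. The function names here are hypothetical, and the 15%, 20%, and 25% buffers are the illustrative range from the text, not recommendations.

```python
def break_even_cac(net_collections_per_start: float, contribution_margin: float) -> float:
    """Break-even CAC = net collections per start x contribution margin."""
    return net_collections_per_start * contribution_margin

def target_cac(break_even: float, buffer: float) -> float:
    """Internal target set some fraction (the buffer) below break-even."""
    return break_even * (1 - buffer)

be = break_even_cac(1800, 0.60)
for buffer in (0.15, 0.20, 0.25):
    print(f"buffer {buffer:.0%}: target CAC ~ ${target_cac(be, buffer):,.0f}")
```

With the example inputs, break-even is $1,080 and the 15–25% buffer range gives internal targets of roughly $918 down to $810, with $864 at 20%.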

How to allocate SEO, website, and retainers without distorting ROI

When costs aren’t tied to a single channel, you have three defensible options—pick one and stay consistent.

- Allocate to an “organic/brand” bucket - Treat SEO/website/retainers as their own channel cost line and evaluate separately with longer horizons.

- Allocate across channels - Spread shared costs across channels by qualified leads or starts (and document the rule).

- Keep shared costs separate in the partner report - Show performance by channel using media-only cost for optimization, and show total ROI using fully-loaded cost for leadership decisions.

Key takeaway: the best allocation rule is the one you can repeat every month without arguing.
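For the "allocate across channels" option, a proportional split is a few lines of code. In this sketch the function name `allocate_shared_cost` and the sample numbers are made up for illustration, and falling back to an even split when every weight is zero is an assumption.

```python
from typing import Dict

def allocate_shared_cost(shared_cost: float, weights: Dict[str, float]) -> Dict[str, float]:
    """Split a shared cost across channels in proportion to starts or qualified leads."""
    total = sum(weights.values())
    if total == 0:
        # Assumption: with no volume to weight by, split evenly.
        even_share = shared_cost / len(weights)
        return {channel: even_share for channel in weights}
    return {channel: shared_cost * w / total for channel, w in weights.items()}

# Illustrative: a $1,000 shared tracking tool allocated by starts.
starts_by_channel = {"paid_search": 8, "gbp": 9, "paid_social": 3}
print(allocate_shared_cost(1000, starts_by_channel))
```

In the sample, paid_search carries $400, gbp $450, and paid_social $150 of the shared tool. Whichever weighting you pick, recording it in the charter's cost rules is what keeps month-to-month comparisons fair.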


Attribution Windows, Cohort Horizons, and LTV Context

You can report short-horizon ROI and longer-horizon value without double-counting if you use cohorts and cumulative roll-forward.

How to choose an attribution window (practical checklist)

- Start from the patient journey - Typical days-to-schedule, days-to-start, and payment lag by service line.

- Match the decision to the window - Short windows for weekly optimizations; longer windows for service lines with longer consult-to-start cycles.

- Keep windows policy-based - Pick a starting policy, then calibrate after 60–90 days of stable measurement.

- Protect low-volume services - Extend the evaluation window or require minimum sample size before budget cuts.

Key takeaway: windows should reflect reality, not reporting convenience.

Service-line starting points to calibrate (not universal rules)

- Emergency - Often shorter windows because intent-to-visit is fast and collections post quickly.

- General new patient - Often a mid-length window to capture first visit plus early follow-up.

- Implants/cosmetic/complex care - Often longer windows because consult-to-start and payment timing can be slower and multi-visit.

Key takeaway: label these as starting points, then calibrate to your real scheduling and collections timing.

90-day ROI vs 12-month value (LTV context)

A 90-day view supports fast decisions and flags constraint issues quickly. A 12-month view helps avoid under-valuing channels that drive patients who expand into additional services later. The governance rule is the same: collections are credited once per episode, and longer-horizon value is reported as cumulative roll-forward by cohort, not as a second “new ROI” claim.

Key takeaway: use LTV context to prevent under-investing in demand creation, without double-counting revenue.
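Cumulative roll-forward by cohort can be pictured as a running total of net collections for one month's starts, compared against that cohort's cost. The sketch below uses invented numbers and a hypothetical function name, `roll_forward`. Each payment appears once in the monthly list, which is what keeps the longer-horizon view from becoming a second ROI claim.

```python
from itertools import accumulate
from typing import List, Optional, Tuple

def roll_forward(cost: float, monthly_net: List[float]) -> Tuple[List[float], Optional[int]]:
    """Return cumulative net collections and the first month index where cost is covered.

    monthly_net[i] is the net collections posted i months after the cohort month.
    """
    cumulative = list(accumulate(monthly_net))
    payback_month = next((i for i, c in enumerate(cumulative) if c >= cost), None)
    return cumulative, payback_month

# Illustrative cohort: $5,000 fully-loaded cost, collections posting over four months.
cumulative, payback_month = roll_forward(5000, [1500, 2500, 2000, 800])
print(cumulative, payback_month)
```

In this made-up cohort, cumulative collections reach 6,000 in the third month (index 2), so payback happens there. A 90-day report and a 12-month report would read different points on the same running total.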


How to Pull Net Collections (Tool-Agnostic)

Net collections are the most common numerator dispute. A defensible system treats net collections as cash received within a defined window, net of refunds/chargebacks, using a consistent pull method.

Production vs collections vs adjustments vs refunds (quick clarity)

Production is what was billed or produced clinically. Collections is what was actually received. Adjustments and write-offs change what might be collectible, but they don’t guarantee cash timing. Refunds and chargebacks are cash going back out and should reduce “net” regardless of whether they occur in a later month (your policy should say how you handle out-of-window refunds so comparisons remain fair).

Common report labels to look for in most PMS/accounting setups

You don’t need the exact same report names across systems, but you do need the same concept: cash received by payment date.

- “Payments received” or “Receipts” by payment date

- “Deposit report” / “Daily deposits” rolled up to month

- “Transactions by payment date” (sometimes split by payment type)

- “Payment summary” that includes patient payments and insurance payments

- “Refunds” / “Reversals” / “Chargebacks” report (so net stays net)

Key takeaway: you’re looking for cash-in and cash-out by date, not procedure totals.

Pull checklist

- Use cash received - Base on payment date, not procedure date.

- Document posting timing - Insurance timing affects payback interpretation, especially for mixed payer models.

- Apply refunds/chargebacks - Reductions should reduce net; document how out-of-window adjustments are handled.

- Define membership policy - Decide how membership receipts are credited and whether hygiene is separated.

- Handle financing timing - Credit collections when funds are received; annotate timing shifts to avoid misreads.

- Reconcile monthly - Keep a brief note explaining any material differences and why they occurred.

Key takeaway: stable numerator rules make partner meetings shorter and more productive.

Worked example: payment date vs procedure date

If a crown is delivered in Month 1 but the insurance payment posts in Month 2, a procedure-date report can make Month 1 look artificially strong and Month 2 look artificially weak. A payment-date net collections pull smooths that distortion and makes payback interpretation more honest. You can still track production operationally—just keep ROI tied to collections by policy.

Mini reconciliation checklist

| • |

Step 1 - Pull total payments received for the month (cash received).

|

| • |

Step 2 - Subtract refunds/chargebacks to arrive at “net.”

|

| • |

Step 3 - Apply your episode attribution window to assign net collections to cohorts (don’t double-count late posts).

|

| • |

Step 4 - Spot-check a small sample from episode → patient → payments to confirm mapping integrity.

|

| • |

Step 5 - Record exceptions in the close packet (timing, write-off policy changes, fee schedule changes). |

Key takeaway: reconciliation is what turns “numbers” into defensible reporting.
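Steps 1 and 2 of the checklist can be sketched in a few lines. This is an illustration under stated assumptions, not a PMS export format: the `Transaction` record, its field names, and the three `kind` labels are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date
from decimal import Decimal


@dataclass(frozen=True)
class Transaction:
    """One cash movement. Field names are hypothetical, not a PMS schema."""
    payment_date: date  # date the cash moved, not the procedure date
    amount: Decimal     # always positive
    kind: str           # "payment", "refund", or "chargeback"


def net_collections_by_month(transactions):
    """Sum cash in minus cash out, grouped by the month of payment_date."""
    totals = defaultdict(Decimal)
    for t in transactions:
        month = t.payment_date.strftime("%Y-%m")
        if t.kind == "payment":
            totals[month] += t.amount
        elif t.kind in ("refund", "chargeback"):
            totals[month] -= t.amount
        else:
            raise ValueError(f"unknown transaction kind: {t.kind}")
    return dict(totals)
```

This sketch nets a refund in the month it occurs. If your policy nets out-of-window refunds against the original cohort instead, the grouping key would change, which is exactly the kind of rule worth writing down in the close packet.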

Lead Episodes and PMS Matching (With a Concrete Episode Window Example)

Lead episodes prevent double-counting and make real behavior reportable: multiple touchpoints, shared phone numbers, blocked caller IDs, cross-device conversion, and multi-location crossover.

A concrete episode window policy example (edit to fit your practice)

Here’s a common starting policy that’s easy to explain and enforce:

- Episode window: 60 days.

- If the same person contacts you again within 60 days, attach it to the same episode.

- Open a new episode if the 60-day window expires, or if intent changes by policy (example: hygiene recall inquiry later becomes an implant consult).

- For repeat “price shoppers” who contact multiple times but never schedule, keep the same episode within the window so you don’t inflate lead volume and distort CPL.

Key takeaway: the point of the window is comparability—not catching every edge case perfectly.
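The window policy above can be expressed as a small attach-or-open rule. This is a sketch, not a reference implementation: the `Episode` fields and the `person_key` and `intent` labels are hypothetical, and measuring the 60 days from the episode's opening date (as opposed to the most recent contact) is an assumption you should settle in your own policy.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta

EPISODE_WINDOW = timedelta(days=60)  # starting policy from the text; edit to fit


@dataclass
class Episode:
    person_key: str
    intent: str
    opened_at: datetime
    events: list = field(default_factory=list)


def attach_event(episodes, person_key, intent, occurred_at, event_type):
    """Attach to an open episode for the same person and intent, else open a new one."""
    for ep in episodes:
        same_person = ep.person_key == person_key
        same_intent = ep.intent == intent
        # Assumption: the window is measured from when the episode opened.
        in_window = timedelta(0) <= occurred_at - ep.opened_at <= EPISODE_WINDOW
        if same_person and same_intent and in_window:
            ep.events.append((occurred_at, event_type))
            return ep
    new_ep = Episode(person_key, intent, occurred_at, [(occurred_at, event_type)])
    episodes.append(new_ep)
    return new_ep
```

With this rule, an ad-click call and a same-day form from one person land in one episode with two events, a contact after the window expires opens a new episode, and a change of intent inside the window also opens a new one.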

How to implement episodes that reconcile

| 1. |

Choose an episode window by intent and document it.

|

| 2. |

Define identity keys and a merge hierarchy (phone + name, then email, then appointment ID).

|

| 3. |

Normalize all inbound events into one episode ledger (call, form, text, chat, walk-in, map call, offline).

|

| 4. |

Link episodes to the PMS with stable keys and an audit trail.

|

| 5. |

Store first-touch/last-touch with timestamps, but credit net collections once per episode.

|

| 6. |

Define an intent-change rule that starts a new episode (example: hygiene recall vs implant consult). |

Key takeaway: episodes don’t make attribution perfect; they make accounting consistent and disputes resolvable.
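Step 2's merge hierarchy (phone + name, then email, then appointment ID) could look something like the sketch below. The contact dictionary keys, the index structure, and the 10-digit US phone normalization are all assumptions made for the example.

```python
import re


def normalize_phone(raw):
    """Keep digits only and drop a leading US country code. None if unusable."""
    digits = re.sub(r"\D", "", raw or "")
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]
    return digits if len(digits) == 10 else None


def identity_keys(contact):
    """Return match keys in priority order: phone + name, email, appointment ID."""
    keys = []
    phone = normalize_phone(contact.get("phone"))
    name = " ".join((contact.get("name") or "").lower().split())
    if phone and name:
        keys.append(("phone_name", f"{phone}|{name}"))
    email = (contact.get("email") or "").strip().lower()
    if email:
        keys.append(("email", email))
    appointment_id = contact.get("appointment_id")
    if appointment_id:
        keys.append(("appointment_id", str(appointment_id)))
    return keys


def find_match(contact, index):
    """Return (episode_id, key_type) for the first key found in index, else None."""
    for key in identity_keys(contact):
        if key in index:
            return index[key], key[0]
    return None
```

Returning the key type alongside the episode ID gives you a simple audit trail: you can see whether a merge happened on phone + name or on a weaker key. Pairing phone with name is also what keeps two family members on a shared number from collapsing into one person.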

Common PMS matching keys and pitfalls (tool-agnostic)

| • |

Appointment ID - Useful when the appointment is created from a tracked workflow; less useful when scheduling happens outside the episode flow or IDs change on reschedule.

|

| • |

Patient ID / chart number - Best once the patient is created; won’t exist at first contact.

|

| • |

Phone/email - Great early, but watch for family-shared numbers, blocked caller ID, and placeholder emails.

|

| • |

“New patient” flags - Often inconsistent across teams; treat them as inputs to governance, not automatic truth. |

Key takeaway: matching works best when you prioritize auditability over perfection.

Qualified Leads and Dispositions: Starter Thresholds, Training, and a Disposition Pick-List v1.0

Qualified leads should be defined in a way that’s easy to apply uniformly across channels. Dispositions should describe outcomes and constraints, not people.

Starter thresholds to calibrate (not universal rules)

| • |

Emergency-intent calls - Often qualify around 30–60 seconds when intent is clear and follow-up is possible.

|

| • |

General new-patient calls - Often qualify around 60–90 seconds when care-seeking intent is clear and scheduling is plausible.

|

| • |

Elective consult calls - Often qualify around 90–120 seconds because intent discussion takes longer and price shopping is common.

|

| • |

Text/chat - Treat as qualified after a two-way exchange confirms intent plus at least one reliable follow-up method. |

Key takeaway: thresholds are starting points; calibrate to your baseline and service mix.
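One way to keep thresholds uniform across channels is to put them in a single lookup instead of in people's heads. The sketch below uses the lower bound of each starter range above; the intent labels and the boolean flags are hypothetical simplifications, and the numbers are meant to be recalibrated.

```python
# Lower bounds of the starter ranges in the text, in seconds. Calibrate before use.
MIN_CALL_SECONDS = {
    "emergency": 30,
    "general_new_patient": 60,
    "elective_consult": 90,
}


def is_qualified_call(intent, duration_seconds, intent_clear, follow_up_possible):
    """A call qualifies when it meets the duration floor and both flags are true."""
    if intent not in MIN_CALL_SECONDS:
        raise ValueError(f"no threshold defined for intent: {intent}")
    long_enough = duration_seconds >= MIN_CALL_SECONDS[intent]
    return long_enough and intent_clear and follow_up_possible


def is_qualified_chat(two_way_exchange, intent_confirmed, has_follow_up_method):
    """Text/chat qualifies on exchange, intent, and a reliable follow-up method."""
    return two_way_exchange and intent_confirmed and has_follow_up_method
```

Duration alone never qualifies a call here. A long call with no clear intent or no way to follow up stays unqualified, which matches how the thresholds are described above.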

Training reality: what makes it stick

Put the disposition picker where the work already happens (PMS note workflow, intake tool, or episode ledger UI). Make stamping required the same day, with an end-of-day sweep for anything still unknown. Reinforce the purpose: dispositions reveal constraints leadership can remove.

Disposition pick-list v1.0 (copy/paste starter set)

Use a controlled list like this to reduce free-text drift. Each disposition should be neutral and action-oriented.

| • |

scheduled - Appointment created; the ladder outcome is scheduled (still track shown/started later).

|

| • |

could_not_reach - You attempted contact but did not connect (track attempts separately if possible).

|

| • |

after_hours_missed - Lead arrived after hours and no two-way exchange occurred within your SLA.

|

| • |

no_availability - Patient wanted care but you could not offer a reasonable appointment window.

|

| • |

price_shopping - Inquiry focused on price only and did not proceed despite follow-up (keep within the same episode window to avoid inflation).

|

| • |

insurance_not_accepted - Payer fit prevented scheduling; useful for access/fit strategy, not blame.

|

| • |

existing_patient_service - Not acquisition intent (hygiene recall, routine reschedule) unless your policy treats reactivation separately.

|

| • |

spam_or_wrong_number - Noise that should not count toward qualification; report separately so CPL doesn’t lie.

|

| • |

not_interested - Patient declined after two-way exchange (capture the most common reason if you have a controlled sub-reason list).

|

| • |

referred_out - Patient needed a service you do not provide; track separately to inform service-line strategy.

|

| • |

duplicate_episode - Same person/same intent inside the episode window; merged into the existing episode.

|

| • |

other_documented - Use sparingly; require a brief controlled reason (not free text) if possible. |

Key takeaway: dispositions are a shared language for constraints and workflow improvements—not a scorecard for staff.
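If your ledger or intake tool accepts imports, a controlled list is easier to enforce in code than by convention. Here is a minimal sketch using the v1.0 values above; the `parse_disposition` helper and its normalization rules are assumptions for illustration.

```python
from enum import Enum


class Disposition(str, Enum):
    """Disposition pick-list v1.0 from the text, as a controlled vocabulary."""
    SCHEDULED = "scheduled"
    COULD_NOT_REACH = "could_not_reach"
    AFTER_HOURS_MISSED = "after_hours_missed"
    NO_AVAILABILITY = "no_availability"
    PRICE_SHOPPING = "price_shopping"
    INSURANCE_NOT_ACCEPTED = "insurance_not_accepted"
    EXISTING_PATIENT_SERVICE = "existing_patient_service"
    SPAM_OR_WRONG_NUMBER = "spam_or_wrong_number"
    NOT_INTERESTED = "not_interested"
    REFERRED_OUT = "referred_out"
    DUPLICATE_EPISODE = "duplicate_episode"
    OTHER_DOCUMENTED = "other_documented"


def parse_disposition(raw):
    """Normalize casing and separators, then reject anything off the list."""
    cleaned = raw.strip().lower().replace(" ", "_").replace("-", "_")
    return Disposition(cleaned)  # raises ValueError for values not on the list
```

Rejecting off-list values at entry is what prevents free-text drift. A value like "left voicemail" fails loudly and gets mapped to `could_not_reach` by a person, instead of quietly becoming a thirteenth category.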

Spam handling mini playbook

| • |

Watch for spikes - Sudden lead increases with no spend change often signal noise.

|

| • |

Separate spam from unreachable - Don’t mix spam_or_wrong_number with could_not_reach.

|

| • |

Report spam alongside qualified volume - Keep denominators honest for CPL and CAC.

|

| • |

Fix upstream - Validation, rate limiting, placement exclusions, and targeting corrections are usually higher leverage than arguing about attribution. |

Key takeaway: clean dispositions protect morale and protect decision quality.

GA4 Handoffs: Cross-Domain and Unwanted Referrals

This section covers a common measurement failure: scheduler, portal, and payment handoffs that erase attribution.

Path in GA4: Admin → Data streams → Web → Configure tag settings → Configure your domains

How cross-domain works and how to verify it

Cross-domain measurement passes identifiers via a linker parameter that often appears as _gl during testing. In reports, that value can be redacted, so focus on continuity rather than “seeing the exact string.” Verification should confirm source/medium does not flip after the handoff.

| • |

Run a tagged click test - Click a tagged URL, complete the handoff, and confirm continuity post-handoff.

|

| • |

Check for parameter stripping - Redirect chains and embedded experiences can drop parameters.

|

| • |

Verify continuity - Confirm source/medium does not reset to the scheduler/payment domain after the handoff. |

Key takeaway: treat GA4 as a handoff diagnostic, not the accounting ledger.

Unwanted referrals (GA4) and why it matters

The unwanted referrals setting reduces attribution resets during handoffs by preventing the third-party domains you list from taking referral credit and resetting session context. It does not fix every handoff problem (redirects, iframes, and parameter stripping can still break continuity), but it removes one common source of “false referrals.”

Key takeaway: unwanted referrals is a stabilizer, not a universal handoff cure.

Verify-it checklist: where to look in GA4 and what “broken” looks like

This is the fastest way to separate “demand changed” from “handoff broke.”

| • |

Traffic acquisition - Check Session source/medium around handoff-heavy journeys. A sudden shift to the scheduler/payment domain as a referral is a red flag.

|

| • |

Referrals - If the scheduler domain spikes as a referrer after a change, suspect unwanted referrals misconfiguration or a handoff reset.

|

| • |

Landing pages - If tagged campaigns are spending but landing-page traffic looks untagged, suspect redirects or tag loss before the landing page fully loads.

|

| • |

Time alignment - If the break starts the same day as a scheduler/redirect/consent change, treat it as a measurement defect until proven otherwise.

|

| • |

Reality check - Cross-check the episode ledger: if episode volume is stable but GA4 attribution shifts, GA4 classification changed—not your true demand. |

Key takeaway: verify continuity and patterns, not perfect labels.

How to Track Online Scheduling Conversions (Scheduler Recipe)

Many dental scheduling tools live on a different domain or embed in ways that can strip attribution. The safest approach is to treat the scheduler as a handoff you QA, not a black box you assume is “tracking fine.”

What counts as a scheduling conversion

Decide what you count, and count it once.

| • |

Appointment requested - Patient submits a request but it is not yet confirmed (high risk of double-counting).

|

| • |

Appointment confirmed - The scheduling system confirms a slot; still validate it maps into the PMS.

|

| • |

Appointment created in PMS - The safest operational definition because it matches what the team works from.

|

| • |

Deposit collected - Common for some service lines; define whether deposit is part of “started” or only a scheduling milestone. |

Key takeaway: “created in PMS” is often the cleanest conversion definition for consistent reporting.

A stand-alone scheduler tracking checklist

| 1. |

Use the same GA4 property across the website and the scheduler domain if you control both; if not, prioritize capturing attribution into the episode ledger before the handoff.

|

| 2. |

Enable cross-domain measurement for the scheduler domain and verify the handoff keeps source/medium continuity (don’t just “look for _gl”).

|

| 3. |

Add the scheduler domain to unwanted referrals to reduce referral resets when the scheduler would otherwise take credit.

|

| 4. |

Test three journeys: paid click → scheduler, GBP website click → scheduler, and direct → scheduler, then confirm the episode ledger captures channel/source correctly for each.

|

| 5. |

Confirm confirmation pages or “appointment created” signals are not double-counting due to reloads, back-button behavior, or embedded iframes.

|

| 6. |

If you cannot pass UTMs/click IDs into the scheduler, implement a fallback: capture attribution into the episode ledger at the moment of inquiry (call/form/chat) and treat scheduler events as outcomes, not sources.

|

| 7. |

Annotate scheduler changes in the change log and rerun these tests after updates. |

Key takeaway: if the scheduler handoff is unstable, capture attribution earlier and use the episode ledger as the truth layer.

Direct/Unassigned Troubleshooting

Direct/Unassigned arguments usually happen when stakeholders treat these as “channels” rather than classification outcomes.

Definitions in plain language

Direct is a channel classification that can include true direct navigation plus missing-context scenarios. Unassigned means the session does not match channel grouping rules based on available parameters/signals. (not set) is a placeholder that typically indicates missing values for a dimension.

Top causes to check before budget decisions

| • |

Scheduler/portal handoffs - Parameters lost or attribution reset post-handoff.

|

| • |

Redirects stripping parameters - Tagging disappears between click and landing.

|

| • |

Consent settings - Reduced observability and modeled classification shifts.

|

| • |

Email/SMS link rewriting - Security scanners and link wrappers can alter referrers.

|

| • |

DNI pool reuse - Attribution bleed when pools are undersized or reused too quickly. |

A fast failure detector: if Direct/Unassigned shifts sharply right after a system change, assume measurement broke until QA proves otherwise.

Symptom → likely cause → fix (quick block)

| • |

Symptom: Scheduler domain suddenly appears as a top referrer → Likely cause: handoff reset → Fix: add unwanted referrals + verify cross-domain continuity.

|

| • |

Symptom: Paid spend steady, but tagged traffic drops and Direct rises → Likely cause: redirects stripping UTMs → Fix: trace final landing URL and remove/repair redirect chain.

|

| • |

Symptom: “Unknown source” website calls spike at peak hours → Likely cause: DNI pool undersized → Fix: increase pool and retest peak-hour attribution.

|

| • |

Symptom: Email/SMS performance flips to Direct/Unassigned → Likely cause: link rewriting or missing UTMs → Fix: enforce UTM rules and test from the actual send tool.

|

| • |

Symptom: Unassigned spikes after consent banner update → Likely cause: consent configuration changed observable signals → Fix: review consent settings, annotate change, and use the episode ledger as decision layer.

|

| • |

Symptom: Form events stable in GA4 but leads in ledger drop → Likely cause: ingestion/webhook failure → Fix: test a form end-to-end and monitor ingestion alerts. |

Key takeaway: symptom-based troubleshooting makes “Direct/Unassigned” a fixable ops issue instead of a recurring debate.

A fast QA sequence

| 1. |

Run controlled UTM click tests from two devices and verify UTMs persist into the episode ledger.

|

| 2. |

Place a test call from a tagged source and confirm channel/source is captured correctly.

|

| 3. |

Test scheduler handoff and confirm attribution does not flip to the scheduler domain.

|

| 4. |

Confirm unwanted referrals settings are correct for the handoff domains.

|

| 5. |

Check recent changes (redirects, consent configuration, link shorteners, SMS/email tools, call routing).

|

| 6. |

Segment reporting by change-log effective dates before declaring “demand changed.” |

Key takeaway: low confidence should trigger fixes and annotation, not budget whiplash.
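Step 6 (segmenting by change-log effective dates) can be done with a quick before/after comparison. The sketch below assumes you have exported daily rows of (date, channel, sessions) from a GA4 report; the row shape and function names are hypothetical, and what counts as a "sharp" shift is left for you to decide.

```python
from datetime import date


def direct_unassigned_share(rows, start, end):
    """Share of sessions classed Direct or Unassigned for start <= day < end."""
    total = flagged = 0
    for day, channel, sessions in rows:
        if start <= day < end:
            total += sessions
            if channel in ("Direct", "Unassigned"):
                flagged += sessions
    return flagged / total if total else 0.0


def shift_after_change(rows, change_date, window_start, window_end):
    """Return (share_before, share_after, difference) around a change-log date."""
    before = direct_unassigned_share(rows, window_start, change_date)
    after = direct_unassigned_share(rows, change_date, window_end)
    return before, after, after - before
```

If the share jumps on the effective date of a scheduler, redirect, or consent change while episode volume in the ledger stays flat, that points to a classification problem to fix and annotate, not a demand change.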

How to Track Phone Calls in GA4 (Step-by-Step)

GA4 can record call intent signals, but call outcomes (answered, qualified, scheduled) should be captured through call tracking and reconciled in the episode ledger.

In GA4, what used to be called conversions are now labeled key events. If you use GA4 events as diagnostic signals, mark your chosen events consistently so your team isn’t lost in UI terminology.

Recommended event names (keep them consistent)

| • |

click_to_call - Tel-link clicks (GA4 diagnostic signal).

|

| • |

call_connected (optional) - Use only if your call platform can send a webhook for answered calls into the episode ledger (keep this out of GA4 unless you’re certain it’s non-sensitive).

|

| • |

qualified_call (episode ledger) - A ladder outcome in your ledger, not a GA4 event. |

Key takeaway: GA4 events should signal intent; the ledger should record outcomes.

Path 1: Track click-to-call (tel:) clicks as a GA4 event

| 1. |

Choose one event name, such as click_to_call.

|

| 2. |

Implement in Google Tag Manager (GTM) or site tagging so it fires on mobile and desktop.

|

| 3. |

Use a link-click trigger type (commonly “Just Links”) targeting Click URL starts with tel:.

|

| 4. |

Avoid stacking multiple triggers that can double-fire (example: both “All Link Clicks” and a tel:-specific trigger).

|

| 5. |

Pass helpful parameters only when appropriate (page_path, location if number is location-specific) and avoid guessing service_line unless the page clearly indicates it.

|

| 6. |

Validate in GA4 DebugView, then confirm it appears in standard reporting once real traffic arrives.

|

| 7. |

QA periodically by clicking a tel: link on mobile, confirming one event fires per click, and verifying the episode ledger still captures the actual call outcome through call tracking. |

Key takeaway: GA4 can tell you “call intent happened,” not “what the call became.”

Most common double-fire causes (and how to prevent them)

| • |

Multiple GTM triggers - Two triggers listening to the same click → consolidate to one tel:-specific trigger.

|

| • |

Click + navigation events - A generic click event plus a custom event → keep one canonical event for tel: clicks.

|

| • |

SPA behavior - Single-page apps can fire multiple history changes → validate in DebugView and ensure one fire per click. |

Key takeaway: your acceptance test is simple—one click should produce one event.

Path 2: Use call tracking for outcomes, then tie to episodes

| 1. |

Ensure calls create or update an episode in your episode ledger.

|

| 2. |

Stamp outcomes with your dispositions and ladder steps (scheduled, shown, started).

|

| 3. |

Reconcile to the PMS using appointment IDs and patient IDs where available.

|

| 4. |

QA with test calls from different sources and verify source/medium and outcomes are captured end-to-end. |

Key takeaway: the episode ledger is what connects calls to ROI, not GA4 alone.

How to Track Form Submissions in GA4 (Step-by-Step)

Many practices measure calls well but lose visibility on forms due to double-fires, missing success signals, or UTMs not being carried into the submission. GA4 can record form events, but attribution and outcomes should still reconcile through the episode ledger.

In GA4, what used to be called conversions are now labeled key events. Use that language internally so “it disappeared” doesn’t become a support ticket.

Recommended event names (keep them consistent)

| • |

generate_lead - GA4 recommended event for successful lead generation (common for forms).

|

| • |

form_submit_success - A clear custom alternative if you want separation from other lead types.

|

| • |

lead_created (episode ledger) - A ledger-side marker that the record was ingested successfully (not a GA4 goal). |

Key takeaway: choose names once so your reporting stays comparable.

Path 1: Track a “success” event on form completion

| 1. |

Choose an event name policy: use generate_lead, or use a custom name like form_submit_success (pick one and stay consistent).

|

| 2. |

Trigger on a true success signal (thank-you page load, a “success” message in the DOM, or a custom dataLayer event fired by the form tool) instead of a generic “submit” click that can fire on errors.

|

| 3. |

In GTM, use a trigger type that matches your form reality: thank-you page view, custom event, or element visibility on a success message. Use “Form Submission” triggers cautiously because they can be noisy on modern sites.

|

| 4. |

Prevent double-fires by ensuring the success signal only happens once per submission (watch for reloads, back button, and SPA behavior).

|

| 5. |

Validate in GA4 DebugView with a real test submission and confirm exactly one event appears per completed form.

|

| 6. |

QA monthly by submitting a test form from a tagged URL and confirming the episode ledger receives the lead with the correct channel/source. |

Key takeaway: measure form completion, not form attempts.

Most common double-fire causes (and how to prevent them)

| • |

Submit click + success page - Both fire → keep only the success signal.

|

| • |

Multiple tags - GA4 event tag duplicated across containers/pages → audit GTM and remove duplicates.

|

| • |

Reload/back behavior - Thank-you page fires again → add safeguards (unique submission ID or one-time event gating). |

Key takeaway: if your forms double-fire, CPL and CPQL become fiction.
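The "unique submission ID" safeguard can also be applied on the ledger side, so a duplicate that slips past the tag layer still creates only one lead. This is an in-memory sketch with hypothetical names; a real ledger would more likely rely on a unique constraint in its database.

```python
class LeadIngest:
    """Accept each submission ID once; later repeats are ignored."""

    def __init__(self):
        self._seen = set()
        self.leads = []

    def ingest(self, submission_id, payload):
        """Return True if a lead was created, False if it was a repeat."""
        if not submission_id:
            raise ValueError("submission_id is required for deduplication")
        if submission_id in self._seen:
            return False  # reload, back button, or duplicate tag
        self._seen.add(submission_id)
        self.leads.append({"submission_id": submission_id, **payload})
        return True
```

A reloaded thank-you page that resends the same ID returns `False` and leaves the lead count unchanged, so CPL is computed on real submissions.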

Path 2: Pass UTMs into hidden fields so the episode ledger has attribution

| 1. |

Capture UTMs and any click identifiers your policy allows at landing time (before the form).

|

| 2. |

Store them in first-party context (cookie/session storage) long enough to survive navigation to the form.

|

| 3. |

Populate hidden form fields with utm_source, utm_medium, utm_campaign (and your chosen channel/source mapping inputs).

|

| 4. |

Ensure the form submission sends those fields into your episode ledger or CRM layer, not only into GA4.

|

| 5. |

Run a UTM QA test on every campaign launch: tagged click → form submit → episode ledger shows correct channel/source. |

Key takeaway: GA4 events help diagnose; the episode ledger needs the attribution fields to be decision-ready.
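On the receiving end, the ledger needs to turn the captured landing URL (or the hidden-field values) into channel and source. A minimal sketch using Python's standard `urllib.parse` follows; the `CHANNEL_MAP` entries and the `unmapped` fallback label are hypothetical and should mirror your own taxonomy.

```python
from urllib.parse import parse_qs, urlsplit

UTM_FIELDS = ("utm_source", "utm_medium", "utm_campaign")

# Hypothetical mapping from (utm_source, utm_medium) to a ledger channel.
CHANNEL_MAP = {
    ("google", "cpc"): "paid_search",
    ("google_business_profile", "gbp"): "gbp",
}


def extract_utms(landing_url):
    """Pull the UTM fields from a URL, trimmed and lowercased for consistency."""
    query = parse_qs(urlsplit(landing_url).query)
    return {f: query[f][0].strip().lower() for f in UTM_FIELDS if f in query}


def ledger_attribution(landing_url):
    """Return the UTM fields plus a mapped channel for the episode ledger."""
    utms = extract_utms(landing_url)
    key = (utms.get("utm_source"), utms.get("utm_medium"))
    return {**utms, "channel": CHANNEL_MAP.get(key, "unmapped")}
```

Keeping an explicit `unmapped` value, instead of guessing a channel, makes tagging mistakes visible during the campaign-launch QA test in step 5.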

Minimal QA checklist for forms

| • |

One submission = one event - Confirm no double-fires.

|

| • |

UTMs persist - Confirm UTMs are captured even if the user navigates before submitting.

|

| • |

Episode ledger receives the record - Confirm lead creation plus required fields.

|

| • |

Spam defenses - Confirm bot filtering reduces noise without blocking real patients. |

Key takeaway: form tracking is “simple” only after you’ve proved it doesn’t lie.

Chat, Webchat, and AI Receptionist Tracking (What Counts as Qualified + QA)

Chat widgets and AI receptionist tools can generate real patients—or a lot of noise. The trick is to treat chat as a first-class lead event in the episode ledger, with the same governance rules as calls and forms.

What counts as a qualified chat lead

| • |

Two-way exchange - The patient and your team (or tool) exchange at least one meaningful message each (not just “hello”).

|

| • |

Intent confirmed - The message indicates care-seeking intent for a service line you provide.

|

| • |

Follow-up method - You capture a reliable callback method (phone or email) or a verifiable messaging thread that staff can follow up on.

|

| • |

Routing outcome - The chat results in a next step: scheduled, follow-up required, or a documented constraint (no availability, insurance not accepted, etc.). |

Key takeaway: if chat can’t be followed up, it’s not a qualified lead—treat it as an intent signal.

How to capture chat attribution without breaking governance

| • |

Capture UTMs at landing - Same rule as forms: store UTMs first, then attach them to the chat lead record when the chat starts or completes.

|

| • |

Create/update an episode - A chat should create an episode the same way a form does.

|

| • |

Avoid PHI/PII in transcripts - Keep chat content minimal and route sensitive clinical detail into controlled systems; treat transcripts like recordings from a compliance standpoint.

|

| • |

Stamp outcomes - Apply the same dispositions and ladder steps as other lead types. |

Key takeaway: chat belongs in the episode ledger, not as “misc website activity.”

QA checklist for chat leads

| • |

Test a tagged chat - Start a chat from a tagged URL and confirm channel/source populate in the episode ledger.

|

| • |

Check ingestion - Confirm chat leads don’t “die” in the widget without reaching your ledger/CRM.

|

| • |

Noise rate - Monitor spam_or_wrong_number and could_not_reach for chat; spikes usually mean bot traffic or misrouting.

|

| • |

Follow-up SLA - If chat replies are slow, treat it like speed-to-lead: the constraint is operational, not marketing. |

Key takeaway: chat is only valuable if it produces episodes that staff can follow up on, with consistent outcomes.

GBP and Phone-First Measurement

Google Business Profile communication and reporting capabilities can vary by region and surface and have changed over time. Avoid building ROI processes that depend on native GBP communication logs as the sole lead ledger. Treat GBP metrics as directional, and reconcile calls/contacts in your episode ledger.

GBP calls vs website clicks vs driving directions (classification rules)

| • |

GBP call - Channel gbp; source google_business_profile; event_type map_call (or your chosen label).

|

| • |

GBP website click - Channel gbp; source google_business_profile; capture via tagged GBP website URL where possible.

|

| • |

GBP driving directions - Treat as a directional intent signal, not a lead outcome; store as an intent event if you track it, but don’t count it as qualified or scheduled without confirmation in the episode ledger. |

Key takeaway: keep GBP intent signals and actual lead outcomes separate so you don’t inflate ROI.

What GBP actions usually mean operationally

Calls often reflect “ready now” intent and can surface access constraints quickly (answer rate, after-hours coverage). Website clicks often indicate comparison behavior and can be improved with faster landing pages and clearer service-line paths. Direction requests often correlate with “nearby” intent but should be treated as context unless they result in a call, form, chat, or scheduled outcome.

Key takeaway: GBP actions are best used to diagnose demand capture and access, not to claim revenue directly.

Where GBP metrics live (high-level) and why they’re directional

GBP provides performance-style metrics that help you understand visibility and actions taken from the listing. Use them to spot changes in demand capture and listing health, but reconcile real outcomes through calls, episodes, and collections. Treat listing metrics as context, not as your lead ledger.

How to tag the GBP website URL

Use simple, consistent UTMs (example: utm_source=google_business_profile and utm_medium=gbp). Keep in mind GA4’s default channel grouping may not recognize “gbp” unless you build a custom channel group, so it may appear as Unassigned in GA4 even though your episode ledger correctly maps it to channel gbp.

Key takeaway: don’t panic if GA4 classifies it oddly—your ledger mapping is the decision layer.

Multi-location note for GBP tagging consistency

If you have multiple locations, use a consistent tagging pattern across all listings, and include location as a field in the episode ledger rather than inventing new source strings per office. This prevents taxonomy drift and makes location-level reporting comparable.

Key takeaway: GBP works best when you treat it as a consistent channel with location as a field, not as many one-off “sources.”
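A small helper can keep the tagging pattern identical across listings. This sketch applies the example UTMs from the text to any location page URL using the standard `urllib.parse` module; the function name and the example URLs are hypothetical.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Example values from the text; the same pair is used for every location.
GBP_TAGS = {"utm_source": "google_business_profile", "utm_medium": "gbp"}


def tag_gbp_url(base_url):
    """Append the shared GBP UTMs, keeping any existing query parameters.

    Note: repeated parameter names in the original URL collapse to the last value.
    """
    parts = urlsplit(base_url)
    params = dict(parse_qsl(parts.query))
    params.update(GBP_TAGS)
    return urlunsplit(parts._replace(query=urlencode(params)))


location_urls = [
    tag_gbp_url("https://example.com/locations/eastside"),
    tag_gbp_url("https://example.com/locations/westside"),
]
```

Both URLs carry the same source and medium, so the offices differ only by landing page. Location can then be recorded as its own field in the episode ledger, which is the pattern the note above recommends.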

Call Tracking, NAP, DNI Pool Sizing, and Call-Only Ads

Dynamic number insertion (DNI) is website call tracking that swaps numbers to attribute calls to traffic sources. NAP consistency refers to keeping your Name, Address, and Phone information consistent across listings and citations—important for local SEO and patient clarity.

DNI vs static numbers

| • |

DNI - Best for attributing website-driven calls; requires pool sizing and QA.

|

| • |

Static tracking numbers - Often used for offline placements; require careful listing decisions and monitoring.

|

| • |

Both - Common when you need strong website attribution and also want to distinguish offline/placement calls. |

Key takeaway: use the method that fits the patient pathway you’re trying to measure.

Minimum viable call tracking checklist

| • |

Choose a vendor and number strategy - Decide DNI for website, static numbers for offline/GBP, or both.

|

| • |

Install DNI correctly - Confirm it swaps on mobile and desktop and doesn’t break forms or accessibility.

|

| • |

Define routing rules - Location routing, after-hours routing, voicemail rules, and overflow handling.

|

| • |

Decide on recording and disclosures - If you record calls, use a compliant disclosure script for your jurisdictions and consult counsel for multi-state scenarios.

|

| • |

Set outcome capture - Ensure calls create/update episodes and dispositions get stamped consistently.

|

| • |

QA and acceptance checks - Test calls from multiple sources, confirm channel/source capture, confirm “unknown source” stays low at peak hours, and confirm outcomes reconcile to the PMS. |

Key takeaway: call tracking is only as good as routing, outcomes, and QA—not the dashboard.

NAP consistency: what to standardize and where it breaks

NAP issues usually show up in three places: your GBP listing, major directories/marketplaces, and data-aggregator-fed citations. The operational rule is simple: standardize your canonical practice name, address formatting, and primary phone number everywhere you control.

| • |

Where inconsistencies usually appear - GBP edits, old practice names, old phone numbers, suite formatting differences, duplicated directory listings.

|

| • |

What to standardize - One canonical name, one canonical address format, and one canonical primary phone number (plus documented additional numbers if used).

|

| • |

How tracking numbers can affect it - Introducing multiple numbers without governance can create duplicates, confuse patients, and complicate reconciliation; treat number strategy as a monitored tradeoff, not a set-and-forget tactic. |

Key takeaway: NAP is operational hygiene—small inconsistencies create big attribution and trust problems over time.

GBP phone number strategy is a tradeoff

| • |

Option A (SEO-first) - Keep the canonical number primary; accept less precise listing-call attribution; rely on website DNI and episodes.

|

| • |

Option B (attribution-first) - Use a tracking number where you need placement-specific call attribution and associate the canonical number as allowed; monitor local visibility, lead quality, and patient confusion signals.

|

| • |

Always test and monitor - Listing behavior and the visibility of additional numbers can vary by surface and device, so validate impact and adjust cautiously. |

Key takeaway: present number strategy as a decision with risks, not a universal rule.

DNI pool sizing: a repeatable sizing method

A practical sizing approach is to estimate peak concurrent sessions likely to call, then add buffer for reuse and segmentation.

| • |

Step 1 - Estimate peak concurrent website sessions during business hours (not daily average).

|

| • |

Step 2 - Multiply by the share of visitors likely to call (use your baseline call rate; if unknown, start conservative and adjust).

|

| • |

Step 3 - Add buffer for session length and reuse risk, and increase further if you segment by location or service line.

|

| • |

Step 4 - QA at peak hours with test calls from multiple sources and confirm the same number isn’t being reused too quickly. |

Key takeaway: pool sizing is not a one-time setup; it’s a capacity setting you validate.

DNI pool sizing worked example (illustrative)

If your busiest hour sees about 80 concurrent sessions and you estimate 3% of those sessions are likely to produce a call attempt, that’s 2.4 concurrent “call-likely” sessions. Add a buffer for longer sessions, reuse, and segmentation (for example, 3–4×, which works out to about 7–10 numbers), then round up with some headroom to a target of roughly 8–12 numbers for that segment. If you segment by two locations, you may need separate pools or a larger shared pool to avoid reuse bleed.

Key takeaway: the exact number depends on peak concurrency and segmentation, so validate with “unknown source” rates and peak-hour tests.
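The sizing steps can be sketched as a small calculation. This is a minimal illustration, not a vendor formula: the function name `dni_pool_size` and its parameters are hypothetical, and the buffer multiplier is a judgment call you validate with peak-hour tests.

```python
import math


def dni_pool_size(peak_concurrent_sessions: int,
                  call_rate: float,
                  buffer_multiplier: float) -> int:
    """Estimate how many tracking numbers one DNI pool segment needs.

    peak_concurrent_sessions: busiest-hour concurrent sessions (not a daily average)
    call_rate: share of sessions likely to call, as a fraction (0.03 means 3%)
    buffer_multiplier: allowance for session length, reuse, and segmentation
    """
    if peak_concurrent_sessions < 0:
        raise ValueError("peak_concurrent_sessions cannot be negative")
    if not 0 <= call_rate <= 1:
        raise ValueError("call_rate must be a fraction between 0 and 1")
    if buffer_multiplier < 1:
        raise ValueError("buffer_multiplier should be at least 1")

    call_likely_sessions = peak_concurrent_sessions * call_rate
    # Round up: a fractional number cannot be provisioned, and a pool needs at least one.
    return max(1, math.ceil(call_likely_sessions * buffer_multiplier))


low_estimate = dni_pool_size(80, 0.03, 3)
high_estimate = dni_pool_size(80, 0.03, 4)
```

Run the function once per segment (for example, per location) if you keep separate pools, and rerun it whenever peak traffic changes.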

Pool health QA acceptance checks

| • |

Unknown/incorrect source rate - If a noticeable share of website calls are “unknown source” or misattributed during peak times, treat it as a pool/implementation issue to fix.

|

| • |

Reuse timing - If numbers appear to recycle quickly at peak, increase pool size or adjust session handling.

|

| • |

Source stability - Test a paid click and an organic click, then call from each and confirm the episode ledger captures correct channel/source. |

Key takeaway: attribution should reduce disputes; if it increases disputes, treat it as a measurement defect.
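Two of the checks above can be approximated from exported logs. The sketch below assumes a call log with a `source` field and a number-assignment log of `(tracking_number, session_id, assigned_at)` tuples. Those shapes are hypothetical, so adapt them to whatever your call-tracking tool exports.

```python
from datetime import datetime, timedelta


def unknown_source_rate(calls):
    """Share of calls whose source is missing or labeled 'unknown'."""
    if not calls:
        return 0.0
    unknown = sum(1 for call in calls if call.get("source") in (None, "", "unknown"))
    return unknown / len(calls)


def fastest_reuse(assignments):
    """Shortest gap between one number serving two different sessions.

    Returns None when no number was reassigned to a different session.
    """
    last_seen = {}  # tracking_number -> (session_id, assigned_at)
    shortest = None
    for number, session_id, assigned_at in sorted(assignments, key=lambda a: a[2]):
        previous = last_seen.get(number)
        if previous is not None and previous[0] != session_id:
            gap = assigned_at - previous[1]
            if shortest is None or gap < shortest:
                shortest = gap
        last_seen[number] = (session_id, assigned_at)
    return shortest


peak_calls = [
    {"source": "google_ads"},
    {"source": "organic"},
    {"source": "unknown"},
    {"source": "google_ads"},
]
peak_assignments = [
    ("555-0101", "s1", datetime(2026, 1, 5, 10, 0)),
    ("555-0102", "s3", datetime(2026, 1, 5, 10, 1)),
    ("555-0101", "s2", datetime(2026, 1, 5, 10, 5)),
    ("555-0102", "s4", datetime(2026, 1, 5, 10, 31)),
]
```

What counts as "too quick" or "a noticeable share" is a local policy decision. The point of the sketch is to make both values visible at peak hours so they can be compared over time.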

Call-only ads and calls that never hit the website

Some leads are phone-first by design: call-only ads, map calls, and voice-assistant driven calls may never produce a website session. Treat these as valid lead events captured through call tracking and the episode ledger.

| • |

How to classify - Use channel paid_search or gbp (based on the true entry point), and store source as the platform label you control (google_ads_call, google_lsa, google_business_profile).

|

| • |

How to reconcile - Stamp outcomes through the same ladder, and hold them to the same confidence checks as website-driven leads.

|

| • |

Common failure mode - Teams over-trust platform call conversions; the episode ledger prevents double-counting and connects calls to net collections. |

Key takeaway: phone-first leads are not “untrackable” if you treat calls as first-class episode events.
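One way to keep phone-first labels consistent is a single lookup table that staff and tools share. In the sketch below, the entry-point keys (`call_only_ad`, `local_services_ad`, `gbp_listing_call`) are hypothetical names, and filing Local Services Ads under `paid_search` is an assumption rather than a rule from this guide.

```python
from typing import Optional, Tuple

# Entry point -> (channel, source). Source labels follow the examples above.
PHONE_FIRST_LABELS = {
    "call_only_ad": ("paid_search", "google_ads_call"),
    # Assumption: Local Services Ads are grouped with paid search here.
    "local_services_ad": ("paid_search", "google_lsa"),
    "gbp_listing_call": ("gbp", "google_business_profile"),
}


def classify_phone_first(entry_point: str) -> Optional[Tuple[str, str]]:
    """Return (channel, source) for a phone-first entry point.

    Returns None for anything unmapped so it is reviewed rather than guessed.
    """
    return PHONE_FIRST_LABELS.get(entry_point)
```

Returning `None` for unmapped entry points keeps new placements from silently landing in the wrong channel.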

Offline Conversion Uploads (Advanced but Common)

Offline uploads can help ad platforms optimize, but they do not replace episode-based ROI governance.

What platforms call this

You may see this described as “offline conversions,” “CRM import,” or “enhanced conversions,” depending on the platform. The naming varies, but the concept is the same: you upload outcome signals back to the ad platform so it can learn what quality looks like.

Key takeaway: treat this as optimization plumbing, not the ROI ledger.

Identifiers you may see (when available)

In practice, uploads may rely on ad-platform identifiers captured during a click and carried forward to the conversion event. For Google Ads scenarios, that can include identifiers such as GCLID, GBRAID, or WBRAID when they exist and are captured. Phone-first journeys often have lower identifier capture rates.

Key takeaway: identifier capture is uneven by channel; don’t treat match rate as a “truth score” for ROI.

Minimum fields you need (conceptually)

At a minimum, uploads typically require:

- An identifier (when available)

- An event name that maps to your ladder definition (qualified, scheduled, started)

- A timestamp (with a consistent timezone policy)

Key takeaway: keep event names and timestamps policy-based so uploads don’t drift away from your ledger.

Minimal implementation outline (thin but complete)

| 1. |

Capture an identifier at click time when possible (on the landing page before handoffs).

|

| 2. |

Store it in first-party context long enough to survive the journey (and write it into the episode record once the lead is created).

|

| 3. |

Choose 1–3 upload events that map to your ladder (qualified, scheduled, started) with clear definitions and timestamps.

|

| 4. |

Upload the event with timestamp + identifier, and keep an audit trail linking upload records back to episode IDs.

|

| 5. |

Monitor match rate as a diagnostic metric: shifts often reflect handoff, consent, or channel-mix changes.

|

| 6. |

Reconcile uploaded events to episode outcomes so platform “wins” do not replace accounting truth. |

Key takeaway: uploads support optimization directionally; the episode ledger stays the decision layer.
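Steps 3 and 4 can be sketched as a small row builder. This is an illustration under stated assumptions: `UploadRow` and `build_upload_row` are hypothetical names, the row is not any ad platform's actual upload format, and normalizing timestamps to UTC is one possible timezone policy rather than a requirement.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass(frozen=True)
class UploadRow:
    episode_id: str   # audit trail back to the episode ledger
    click_id: str     # identifier captured at click time
    event_name: str   # ladder event, e.g. "scheduled"
    event_time: str   # ISO 8601 string, normalized to UTC


def build_upload_row(episode_id: str,
                     click_id: Optional[str],
                     event_name: str,
                     event_time: datetime) -> Optional[UploadRow]:
    """Build one upload row, or return None when no identifier was captured."""
    if not click_id:
        # Phone-first journeys often have no click ID; they stay in the ledger only.
        return None
    if event_time.tzinfo is None:
        raise ValueError("event_time must be timezone-aware")
    utc_time = event_time.astimezone(timezone.utc)
    return UploadRow(episode_id, click_id, event_name, utc_time.isoformat())


# Example: an appointment scheduled at 2:30 PM in a UTC-8 office.
office_tz = timezone(timedelta(hours=-8))
row = build_upload_row("ep-1001", "example-click-id", "scheduled",
                       datetime(2026, 1, 5, 14, 30, tzinfo=office_tz))
```

Keeping `episode_id` on every row is what makes step 6 possible: each uploaded event can be traced back to the episode outcome it claims to represent.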

What events to upload first (and why)

Start with one to three events that are stable and operationally meaningful:

| • |

Qualified lead - Helps reduce spam/noise optimization if qualification is applied consistently.

|

| • |

Scheduled - Helps platforms learn which leads actually convert into appointments.

|

| • |

Started - Strong signal, but ensure your “started” definition is locked and applied consistently. |

Key takeaway: upload fewer events well before you upload many events inconsistently.

Common rejection reasons (and what to check)

If uploads fail or volume drops unexpectedly, common causes include:

- Timestamp formatting issues or timezone mismatches

- Missing or malformed identifiers

- Event names that don’t match expected values

- Consent or handoff changes that reduced identifier capture

Key takeaway: troubleshoot uploads like any other pipeline—test one journey end-to-end and verify the fields.
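A pre-upload check can catch most of these causes before a file is sent. The sketch below assumes rows are dictionaries with hypothetical keys `click_id`, `event_name`, and `event_time`, and that timestamps are ISO 8601 strings with an explicit offset. Your platform's real requirements may differ, so treat the rules as placeholders.

```python
from datetime import datetime

# Assumption: these are the only ladder events you upload.
ALLOWED_EVENTS = {"qualified", "scheduled", "started"}


def upload_row_problems(row: dict) -> list:
    """Return a list of human-readable problems for one upload row."""
    problems = []
    if not str(row.get("click_id") or "").strip():
        problems.append("missing identifier")
    if row.get("event_name") not in ALLOWED_EVENTS:
        problems.append("unexpected event name")
    try:
        parsed = datetime.fromisoformat(str(row.get("event_time", "")))
    except ValueError:
        problems.append("unparseable timestamp")
    else:
        if parsed.tzinfo is None:
            problems.append("timestamp has no timezone offset")
    return problems


good_row = {"click_id": "example-click-id", "event_name": "scheduled",
            "event_time": "2026-01-05T22:30:00+00:00"}
bad_row = {"click_id": " ", "event_name": "Scheduled",
           "event_time": "2026-01-05 14:30:00"}
```

Consent and handoff changes will not show up as malformed rows. They show up as fewer rows with identifiers, which is why volume trends need to be reviewed alongside row-level checks.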

Why match rates drop (and what to check)

Match rates often depend on whether an identifier was captured, whether handoffs preserved it, and whether consent choices limited observable signals. Match rates can also shift when domains change, redirects are introduced, or call routing changes.

Mini troubleshooting checklist

| • |

Handoff change - Did a scheduler, portal, or redirect change remove identifiers before capture?

|

| • |

Consent change - Did consent settings change how tags behave or what can be stored?

|

| • |

Phone-first shift - Did volume move toward calls or map calls where click IDs are less available?

|

| • |

Field mapping drift - Did an upload field name, format, or timestamp rule change?

|

| • |

QA tests - Can you trace a single test journey from click → episode → outcome → upload record? |

Key takeaway: uploads improve optimization directionally; the episode ledger remains the accounting truth layer.
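A ledger-side check that supports this checklist is the share of episodes that carry a captured identifier, split by channel. It is a proxy, not the platform's match rate, and the `channel` and `click_id` keys below are hypothetical field names.

```python
from collections import Counter


def capture_rate_by_channel(episodes):
    """Share of episodes per channel that have a captured click identifier."""
    totals = Counter()
    captured = Counter()
    for episode in episodes:
        channel = episode["channel"]
        totals[channel] += 1
        if episode.get("click_id"):
            captured[channel] += 1
    return {channel: captured[channel] / totals[channel] for channel in totals}


this_month = [
    {"channel": "paid_search", "click_id": "a1"},
    {"channel": "paid_search", "click_id": "a2"},
    {"channel": "paid_search", "click_id": None},
    {"channel": "paid_search", "click_id": "a3"},
    {"channel": "gbp", "click_id": None},
    {"channel": "gbp", "click_id": None},
]
rates = capture_rate_by_channel(this_month)
```

Comparing these rates month over month helps separate a genuine capture problem (a handoff or consent change) from a simple shift in channel mix toward phone-first sources.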

What success looks like

Success is directional: uploads help platforms learn which traffic is more likely to produce qualified, scheduled, or started outcomes. They rarely “solve attribution” and should not be used as the accounting basis for ROI. Reconcile uploads to episodes and net collections so partners can see one consistent truth layer.

A practical rule: keep the episode ledger as the accounting truth layer, and treat uploads as an optimization layer with documented scope and limits.

Key takeaway: uploads can help optimization, but they won’t settle ROI disputes without reconciliation.

Multi-Location and Shared Spend

Multi-location reporting fails when definitions drift by office, patients cross over between locations, or shared budgets are allocated inconsistently.

Crossover edge cases (and a clean policy)

A common crossover scenario is: the patient calls Location A, schedules at Location B, treatment happens at B, and collections post under B. If you don’t define policy, teams argue about “who gets credit.”

| • |

Policy - Keep one episode, store inquiry_location and service_location, and credit net collections once to the episode.

|

| • |

Reporting - Show both locations in the partner report so leaders can see demand routing and capacity realities.

|

| • |

Governance - If cross-location scheduling is expected, treat it as an access strategy, not a tracking error. |

Key takeaway: crossover handling is primarily a governance problem, not an analytics problem.
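The crossover policy can be sketched as one record with two location fields. The `Episode` class and `collections_by` helper are hypothetical. The point is that net collections are stored once per episode and can be viewed by either location without being counted twice.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Episode:
    episode_id: str
    inquiry_location: str   # where the patient first called or inquired
    service_location: str   # where treatment happened and collections posted
    net_collections: float  # credited once, to the episode


def collections_by(episodes, field):
    """Total net collections grouped by 'inquiry_location' or 'service_location'."""
    totals = defaultdict(float)
    for episode in episodes:
        totals[getattr(episode, field)] += episode.net_collections
    return dict(totals)


episodes = [
    Episode("ep-1", "Location A", "Location B", 1200.0),  # crossover case
    Episode("ep-2", "Location A", "Location A", 800.0),
]
by_inquiry = collections_by(episodes, "inquiry_location")
by_service = collections_by(episodes, "service_location")
```

Both views sum to the same total, so a partner report can show demand (inquiry) and delivery (service) side by side without inflating revenue.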

Shared spend allocation examples (with numbers)

Choose one rule, document it, and stick with it long enough to interpret trends.

| • |

Even split - Example: $6,000 brand spend across 2 locations → $3,000 each. Best when volume is low or when brand is truly shared and location-level precision would be misleading.

|

| • |

Starts-based - Example: $6,000 brand spend, Location A has 30 starts and Location B has 20 starts → allocate $3,600 to A and $2,400 to B. Best when starts are stable and comparable.

|

| • |

Qualified-leads-based - Example: $6,000 brand spend, A has 90 qualified and B has 60 qualified → allocate $3,600 to A and $2,400 to B. Best when scheduling capacity differs but qualification is consistent.

|

| • |

Guardrail - If allocations swing wildly due to tiny sample sizes, widen the evaluation window or present blended results to avoid overreaction. |

Key takeaway: allocation is about consistency and fairness, not false precision.
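All three rules are the same proportional calculation with different weights. The `allocate_spend` helper below is a hypothetical sketch. Pass equal weights for an even split, starts for starts-based, or qualified leads for qualified-leads-based.

```python
def allocate_spend(total_spend: float, weights: dict) -> dict:
    """Split shared spend across locations in proportion to the given weights."""
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    # Rounded to cents; with uneven weights the rounded parts may differ
    # from the total by a cent, so reconcile if exact sums matter.
    return {
        location: round(total_spend * weight / total_weight, 2)
        for location, weight in weights.items()
    }


even_split = allocate_spend(6000, {"A": 1, "B": 1})
starts_based = allocate_spend(6000, {"A": 30, "B": 20})
qualified_based = allocate_spend(6000, {"A": 90, "B": 60})
```

Whichever weights you choose, keep the same rule for long enough that trends reflect performance rather than a change in allocation method.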

Reporting layout example (what you must be able to filter)

To prevent internal disputes, a multi-location report should be filterable by:

- inquiry_location and service_location

- service_line

- channel and source

- confidence tier

- constraints (availability, answer rate, speed-to-lead)

Key takeaway: location comparisons are only fair when you can see capacity and routing differences.

Confidence Tiers and QA Cadence

Confidence tiers protect decisions and rebuild trust after “bad dashboard” experiences by making measurement quality visible.

Starting confidence tiers (then calibrate after 30 days)

| • |

High confidence - Stamping completeness ≥ 95% and QA pass rate ≥ 90%, with no unexplained outages.

|

| • |

Medium confidence - Completeness of 85% to just under 95%, or intermittent failures with known issues labeled and time-bounded.

|

| • |

Low confidence - Completeness < 85% or repeated QA failures that risk missing lead events. |

Key takeaway: low confidence is a systems alert, not a staff verdict.
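The starting tiers can be written as a small rule so every report applies them the same way. This is a sketch with hypothetical names. The two boolean flags stand in for judgment calls ("unexplained outages" and "repeated QA failures") that your team would define, and treating anything that is neither high nor low as medium is an assumption.

```python
def confidence_tier(completeness: float,
                    qa_pass_rate: float,
                    unexplained_outage: bool = False,
                    repeated_qa_failures: bool = False) -> str:
    """Classify a reporting period as 'high', 'medium', or 'low' confidence.

    completeness and qa_pass_rate are fractions (0.95 means 95%).
    """
    if completeness < 0.85 or repeated_qa_failures:
        return "low"
    if completeness >= 0.95 and qa_pass_rate >= 0.90 and not unexplained_outage:
        return "high"
    # Assumption: everything between the two rules above is medium.
    return "medium"
```

After the first 30 days, adjust the thresholds in one place rather than in each report, so tier labels stay comparable across months.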

QA cadence

| • |

Daily - Test form submission and verify lead creation and required fields.

|

| • |

Weekly - UTM click test, DNI test calls from two sources, dedup test, stamping completeness spot check, spam trend review.

|